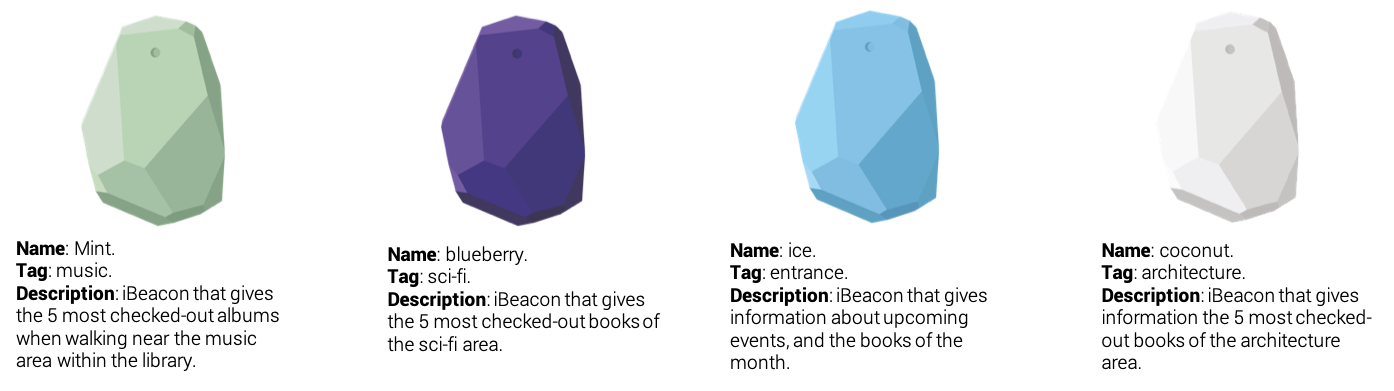

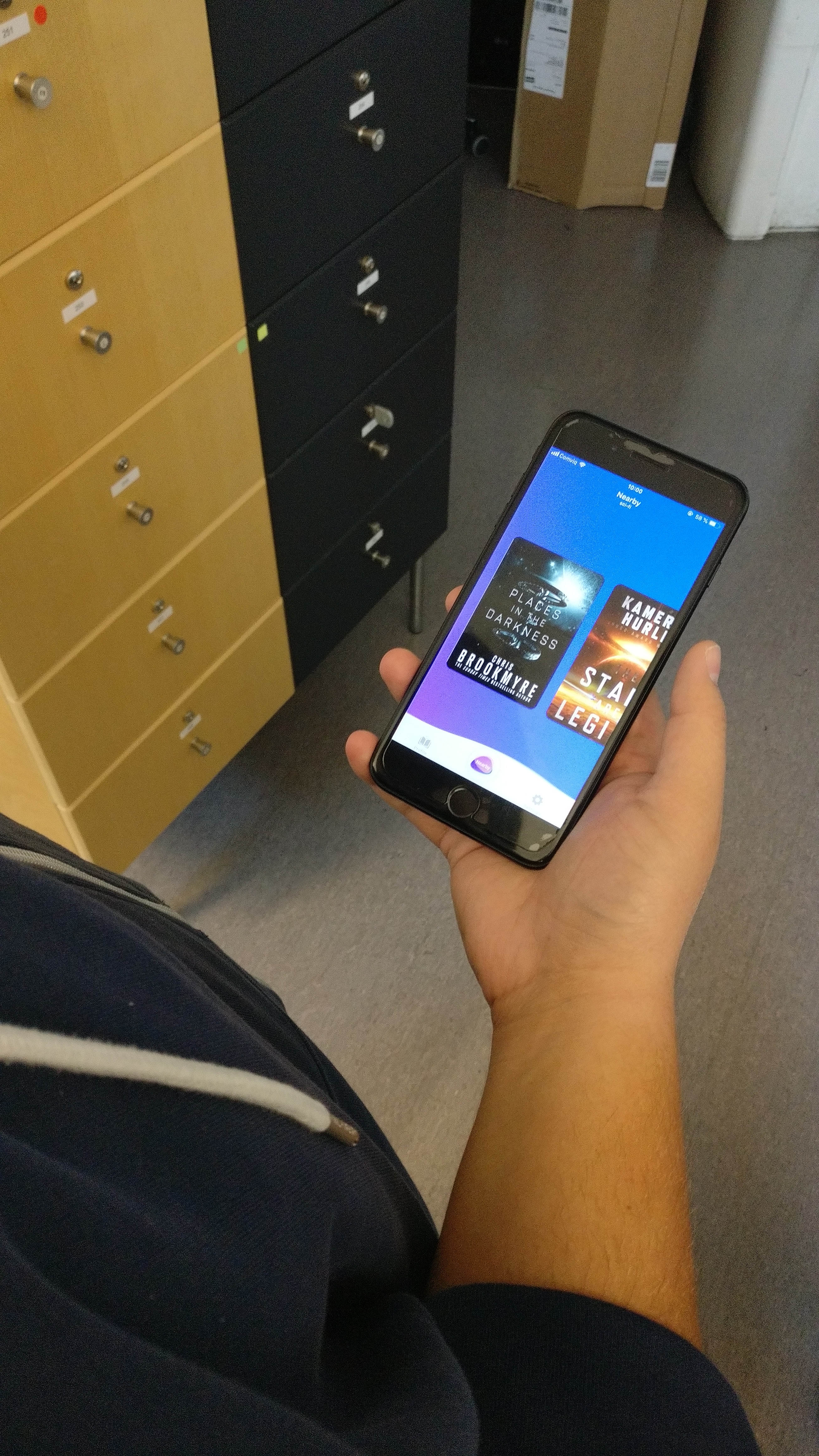

It's an app that helps users find relevant content based on their physical location in the library, using proximity beacons placed in each section. With this app, the library can promote specific books or themes, and users can discover books that may be new to them.

The team consisted of two back-end developers, two front-end developers, and two designers. We (the designers) decided to conduct user research through surveys and interviews with library users, to better understand their needs and adjust the given requirements where necessary.

I created a journey map from the gathered information, and we used it in a workshop that included a brainstorming and sketching session. The requirements were revised and the functionalities agreed upon with the developers. I designed interactive prototypes that were used in user interviews to gather feedback on both functionality and usability.

Based on these insights and feedback, a new version was made with refined interactions and animations. The high-fidelity prototype was then presented to the developers as the basis for their work.

We then performed usability tests on the app and also used the think-aloud method to gain further insights.

The main methods I used for this project were:

The tools I used for this project were: